Machine learning models are created step-by-step. Gather data, train, validate; that kind of thing. Those steps, taken together, make up a pipeline, and pipelines are at the heart of every machine learning project. But building and maintaining these pipelines can be cumbersome — so how can we make life easier?

In this blog series, we’ll show you the ins and outs of one pipelining tool in particular, ZenML. We’ll discuss how it works, what makes it different from other pipelining tools, and how it helps you to manage complex training workflows.

There will be three parts to this series:

- Getting started: First, we’ll set up an initial pipeline that trains a minimum viable model.

- Experiments: Next, we'll add experiment tracking to our pipeline and iterate on our model to improve its accuracy.

- Model serving: Finally, we'll deploy the model as a server so it can receive audio in real time.

To go with the series we’ve put together an example project that trains a model to recognise simple spoken commands. We will use this to build an Amazon Alexa-style digital assistant. You can check out the Git repo here.

Obstacles in the road

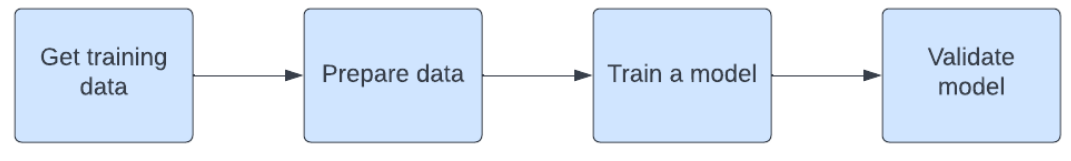

Before getting into ZenML itself, let’s take a look at what goes on inside a pipeline. This will give us the context to understand what problems ZenML is solving and how it solves them.

Taking our speech recognition model as an example, we need to:

Get training data: we’ve got a bunch of audio recordings which we maintain in DVC. This lets us track different versions of the data, and makes it easy for people to collaborate on that data. (See the Fuzzy Labs guide to data version control to learn more.)

Prepare data: before training the model, we transform the audio into spectrograms using a Python library called Librosa. In addition, as per common practice, we split the data into training and test sets.

Fit a model to the data: we use TensorFlow to train an LSTM model, a reasonable starting point for speech recognition. We don't worry at first about how good the model is, because we will improve it later on.

Validate model: use the test dataset to measure the model’s performance.
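
To make these four steps concrete, here is a minimal sketch of them as plain Python functions chained together. Everything in it is a stand-in made up for illustration: the data is synthetic rather than pulled from DVC, the "features" are simple averages rather than Librosa spectrograms, and the "model" is a nearest-centroid classifier rather than a TensorFlow LSTM.

```python
import random
from statistics import mean


def get_data(n_per_class=50, seed=0):
    """Stand-in for fetching recordings: two synthetic 'words' as noisy signals."""
    rng = random.Random(seed)
    data = []
    for label, level in (("yes", 1.0), ("no", -1.0)):
        for _ in range(n_per_class):
            signal = [level + rng.gauss(0, 0.5) for _ in range(20)]
            data.append((signal, label))
    rng.shuffle(data)
    return data


def prepare_data(data, test_fraction=0.2):
    """Stand-in for spectrogram extraction: one feature per clip, then split."""
    features = [(mean(signal), label) for signal, label in data]
    n_test = int(len(features) * test_fraction)
    return features[n_test:], features[:n_test]  # (train, test)


def train(train_set):
    """Stand-in for model fitting: remember the mean feature of each class."""
    labels = {y for _, y in train_set}
    return {lab: mean([x for x, y in train_set if y == lab]) for lab in labels}


def evaluate(model, test_set):
    """Accuracy of nearest-centroid predictions on the held-out set."""
    correct = sum(
        min(model, key=lambda lab: abs(model[lab] - x)) == label
        for x, label in test_set
    )
    return correct / len(test_set)


def run_script_pipeline():
    """Chain the four steps: get data, prepare, train, validate."""
    train_set, test_set = prepare_data(get_data())
    model = train(train_set)
    return evaluate(model, test_set)


if __name__ == "__main__":
    print(f"accuracy: {run_script_pipeline():.2f}")
```

Each function only talks to the next through its return value, which is the property a pipelining tool builds on.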

These steps are all straightforward. If we put them all into a Python script then that would already be a pipeline of sorts. But a proper pipelining tool gives us a number of features that we don’t get from the just-a-Python-script approach:

Reproducibility: Anybody should be able to train this model, on any machine. This means we need a reproducible environment to run the pipeline in, which includes our Python dependencies (TensorFlow, Librosa), along with the right code and data versions.

Note how every ML pipeline will have dependencies that are unique to the application. Because we’re working with audio, we need Librosa; if we were working with images, we might want OpenCV, and so on.

Parameterisation: Each time we iterate on the model, we want to change one thing and then observe how the model behaves. A pipelining tool lets us run a pipeline with different parameters, without having to modify the code each time.

Tracking: Pipelining tools keep track of each pipeline run, so we can always go back and see historical runs. Note there’s some overlap between this kind of tracking and experiment tracking. We’ll get into this more in the next blog, but in short, pipelining tools track basic information about each pipeline step, while experiment trackers capture a much richer set of information about all aspects of the model.
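
As a rough illustration of parameterisation and tracking (not how ZenML or any other tool implements them), the sketch below runs a stand-in pipeline function with different parameters and appends a record of each run to an in-memory history. The names `TrainConfig`, `run_pipeline` and `RunTracker` are hypothetical, and the "accuracy" is a made-up formula rather than a real measurement.

```python
import time
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class TrainConfig:
    """Parameters we might want to vary between runs (hypothetical)."""
    epochs: int = 5
    learning_rate: float = 0.01


def run_pipeline(config):
    """Stand-in pipeline: returns a fake 'accuracy' derived from the config."""
    return min(1.0, 0.5 + 0.05 * config.epochs)


@dataclass
class RunTracker:
    """Keeps a history of every run: parameters, result and timestamp."""
    history: list = field(default_factory=list)

    def run(self, config):
        result = run_pipeline(config)
        self.history.append({
            "run_id": len(self.history) + 1,
            "recorded_at": time.time(),
            "params": asdict(config),
            "accuracy": result,
        })
        return result


tracker = RunTracker()
for epochs in (2, 5, 8):
    # Same pipeline code each time; only the parameters change.
    tracker.run(TrainConfig(epochs=epochs))

# Because every run was recorded, we can look back and compare them.
best = max(tracker.history, key=lambda record: record["accuracy"])
print(best["run_id"], best["params"])
```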

So when we dig into the details, there’s quite a lot that we need, even for a simple pipeline. Now, let’s look at how ZenML approaches pipelines.

Building a pipeline in ZenML

ZenML is an extensible, open-source MLOps framework for creating reproducible pipelines. There are a lot of tools in the MLOps space which support pipelines, but two things stand out in ZenML’s approach:

- A focus on providing a really well-engineered Python library to help data scientists write good pipelines.

- Leaving everything else to third-party integrations. ZenML can run pipelines anywhere, serve models anywhere, store artifacts anywhere (and so on; you get the idea).

When you install ZenML, you get two things: a Python library for writing pipelines, and a command line tool for configuring and running your pipelines.

So what does a ZenML pipeline look like?

We start by defining each of our steps as a Python function. Here’s a simplified outline of the four steps needed for our audio model:<pre><code># Import paths as used by the ZenML release this series was written against;
# they may differ in newer releases.
import numpy as np
import tensorflow as tf
from zenml.steps import Output, step


@step
def get_words() -> Output(words=np.ndarray):
    ...


@step
def load_spectrograms_from_audio(
    words: np.ndarray
) -> Output(X_train=np.ndarray, X_test=np.ndarray, y_train=np.ndarray, y_test=np.ndarray):
    ...


@step
def lstm_trainer(
    X_train: np.ndarray,
    y_train: np.ndarray,
    timesteps: int,
) -> tf.keras.Model:
    ...


@step
def keras_evaluator(
    X_test: np.ndarray,
    y_test: np.ndarray,
    model: tf.keras.Model,
) -> Output(loss=float, accuracy=float):
    ...
</code></pre>

(N.B. we’ve left out the implementation of each step, but you can view all the pipeline code here.)

The first thing to note is the @step decorator, provided by ZenML. This is how ZenML identifies a step function.

ZenML requires us to use Python’s type annotations on each step function. This not only makes it easier to see how information flows through a pipeline when reading the code, it also helps us avoid a lot of common coding errors, because mismatched types can be caught by a type checker before the pipeline runs.

Each step has an output. For instance, the train step outputs a model, and the evaluate step outputs loss and accuracy. ZenML keeps a record of every output associated with a pipeline run, which enables us to go back and inspect these later on.
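
To give a feel for what a decorator can do with type annotations, here is a toy version written from scratch. It is not ZenML's implementation: `toy_step` and `RUN_RECORD` are hypothetical names, and unlike a static type checker this sketch only checks argument and return types at call time. It also stores each step's most recent output, loosely mirroring the idea of keeping a record of outputs.

```python
import functools
from typing import get_type_hints

RUN_RECORD = {}  # hypothetical store: step name -> most recent output


def toy_step(func):
    """Check keyword arguments and the return value against the annotations,
    then record the output under the function's name."""
    hints = get_type_hints(func)

    @functools.wraps(func)
    def wrapper(**kwargs):
        for name, value in kwargs.items():
            expected = hints.get(name)
            if expected is not None and not isinstance(value, expected):
                raise TypeError(
                    f"{func.__name__}: '{name}' should be {expected.__name__}, "
                    f"got {type(value).__name__}"
                )
        result = func(**kwargs)
        expected = hints.get("return")
        if expected is not None and not isinstance(result, expected):
            raise TypeError(
                f"{func.__name__}: output should be {expected.__name__}, "
                f"got {type(result).__name__}"
            )
        RUN_RECORD[func.__name__] = result
        return result

    return wrapper


@toy_step
def count_words(text: str) -> int:
    return len(text.split())


@toy_step
def describe(count: int) -> str:
    return f"{count} words"


# The annotations document how data flows: str -> int -> str.
describe(count=count_words(text="turn the lights on"))
print(RUN_RECORD)
```

Passing the wrong type, for example `describe(count="four")`, raises a `TypeError` before the step body runs.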

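So we've defined our steps. Next, we need to combine these steps into a pipeline:<pre><code># Import path as used by the ZenML release this series was written against.
from zenml.pipelines import pipeline


# 1. The generic pipeline: a template that names the steps it needs.
@pipeline(requirements_file="pipeline-requirements.txt")
def train_and_evaluate_pipeline(get_words, spectrogram_producer, lstm_trainer, keras_evaluator):
    ...  # connect up each step


# 2. The pipeline instance: plug a concrete step into each slot.
#    (The definition of LSTMConfig is omitted from this outline.)
p = train_and_evaluate_pipeline(
    get_words=get_words(),
    spectrogram_producer=load_spectrograms_from_audio(),
    lstm_trainer=lstm_trainer(config=LSTMConfig()),
    keras_evaluator=keras_evaluator(),
)

# 3. Run it.
p.run()
</code></pre>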

There are three parts to this:

A generic pipeline: defined using the @pipeline decorator. This will be our generic template for a pipeline. It says, for example, that we need a get_words step, but it doesn’t tie us to a specific implementation of that step.

The pipeline instance: here we build an actual pipeline by combining our steps together. We can create as many different pipelines as we like. For example, imagine we had two alternative training steps, and wanted to compare them. We can very easily create two pipelines for doing this.

Running the pipeline: finally, we just invoke .run() on our pipeline.
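
The split between a generic pipeline and a pipeline instance can be illustrated without ZenML at all. In the plain-Python sketch below (all names are hypothetical, and the data is a toy), the template fixes how the steps connect, while each instance plugs in concrete implementations. Comparing two training steps then simply means building two instances.

```python
def train_and_evaluate(get_data, trainer, evaluator):
    """Template: fixes the wiring between steps, not their implementations."""
    def run():
        train_set, test_set = get_data()
        model = trainer(train_set)
        return evaluator(model, test_set)
    return run


def get_data():
    """Toy data: (x, y) pairs with y = 2x, split into train and test."""
    points = [(x, 2 * x) for x in range(10)]
    return points[:8], points[8:]


def mean_trainer(train_set):
    """Baseline: always predict the mean of the training targets."""
    avg = sum(y for _, y in train_set) / len(train_set)
    return lambda x: avg


def slope_trainer(train_set):
    """Fit y = a * x by least squares through the origin."""
    a = sum(x * y for x, y in train_set) / sum(x * x for x, _ in train_set)
    return lambda x: a * x


def mse_evaluator(model, test_set):
    """Mean squared error on the held-out points."""
    return sum((model(x) - y) ** 2 for x, y in test_set) / len(test_set)


# Two instances of the same template, differing only in the training step.
baseline = train_and_evaluate(get_data, mean_trainer, mse_evaluator)
candidate = train_and_evaluate(get_data, slope_trainer, mse_evaluator)

print("baseline MSE:", baseline())
print("candidate MSE:", candidate())
```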

Why ZenML?

As we mentioned earlier, there are some concepts that turn out to be really important for pipelines.

The first is reproducibility. By writing really clear, modular pipelines, we can efficiently re-run a pipeline many times over. ZenML not only encourages this clear programming style, it also helps us to capture pipeline dependencies, which we’ve done by adding a pip requirements file (pipeline-requirements.txt).

Recall how earlier we mentioned that our audio model depends on a specific audio processing library; once ZenML knows about this, it will make the library available whenever the pipeline runs.

The pipeline that we’ve written can be run on any data scientist’s machine, and it will do the same thing and produce the same model each time. It can also run in a dedicated model training environment, like Kubeflow, which you might want if you need more compute power than your own machine has. You don’t need to modify your pipeline in any way to do this; ZenML figures out how to run the pipeline in whatever target environment you’re using.

The notion of writing a pipeline once and running it anywhere is one of the unique things about ZenML. It means your pipelines are decoupled from your infrastructure, which in turn enables a data scientist to focus just on the pipeline, without worrying about what infrastructure it will run on.
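
One way to picture this decoupling is a pipeline definition that knows nothing about where it executes, plus interchangeable runners that do. The sketch below is a plain-Python analogy rather than ZenML's mechanism: `InProcessRunner` and `WorkerThreadRunner` are hypothetical names, and a worker thread merely stands in for a remote backend, which in reality is far more involved.

```python
from concurrent.futures import ThreadPoolExecutor


def double(x):
    return x * 2


def add_one(x):
    return x + 1


# The pipeline itself: an ordered list of steps, with no infrastructure details.
PIPELINE = [double, add_one]


class InProcessRunner:
    """Runs every step directly in the calling thread."""

    def run(self, steps, value):
        for step in steps:
            value = step(value)
        return value


class WorkerThreadRunner:
    """Hands each step to a worker thread, standing in for a remote backend."""

    def run(self, steps, value):
        with ThreadPoolExecutor(max_workers=1) as pool:
            for step in steps:
                value = pool.submit(step, value).result()
        return value


# The same pipeline runs unchanged on either runner.
for runner in (InProcessRunner(), WorkerThreadRunner()):
    print(type(runner).__name__, runner.run(PIPELINE, 20))
```

Swapping the runner changes where the work happens without touching `PIPELINE`.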

Parameterisation in ZenML works in a couple of ways. This is something we’ll get into properly in the next blog, on experiment tracking. But in short, every time a pipeline runs, we can provide a configuration, so that we can tweak certain things without modifying the code.

One final thing which we’ve alluded to but not yet covered in detail is ZenML’s third-party integrations. The philosophy behind ZenML is to provide the right abstractions that allow you to write good pipelines, and let everything else be handled by integrations.

This means that not only can the pipeline itself run on a variety of environments, but also that you can tie different experiment trackers, model serving frameworks, monitoring tools, and artifact stores into a pipeline.

Currently, the list of integrations includes all major ML frameworks (PyTorch, TensorFlow, etc.), Kubeflow and Airflow for pipeline orchestration, the three major cloud providers for artifact storage, MLflow for model serving, and Evidently for monitoring. You can see the full list of integrations here.

What's Next?

This is the first blog in a series exploring ZenML in a real-world use case. In the next blog, we’ll add experiment tracking to our pipeline, which will allow us to iterate on our model to improve its performance.

Then, in the third instalment of this series, we’ll explore deploying models through pipelines. Having iterated our model into a reasonable shape, we will deploy it and try it out on some real inputs.